Removing Superfluous Functionality

After you have identified and addressed any obvious bottlenecks that have transparent changes, you can also use APD to gather a list of features that are intrinsically expensive. Cutting the fat from an application is more common in adopted projects (for example, when you want to integrate a free Web log or Web mail system into a large application) than it is in projects that are completely home-grown, although even in the latter case, you occasionally need to remove bloat (for example, if you need to repurpose the application into a higher-traffic role).

There are two ways to go about culling features. You can systematically go through a product's feature list and remove those you do not want or need. (I like to think of this as top-down culling.) Or you can profile the code, identify features that are expensive, and then decide whether you want or need them (bottom-up culling). Top-down culling certainly has an advantage: It ensures that you do a thorough job of removing all the features you do not want. The bottom-up methodology has some benefits as well:

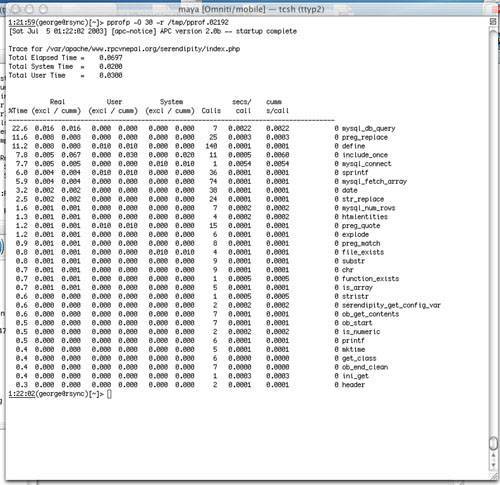

In general, I prefer using the bottom-up method when I am trying to gut a third-party application for use in a production setting, where I do not have a specific list of features I want to remove but am simply trying to improve its performance as much as necessary.

Let's return to the Serendipity example. You can look for bloat by sorting a trace by inclusive times. Figure 18.7 shows a new trace (after the optimizations you made earlier), sorted by exclusive real time. In this trace, two things jump out: the define() functions and the preg_replace() calls.

Figure 18.7. A postoptimization profile.

In general, I think it is unwise to make any statements about the efficiency of define(). The usual alternative to using define() is to utilize a global variable. Global variable declarations are part of the language syntax (as opposed to define(), which is a function), so the overhead of their declaration is not as easily visible through APD. The solution I would recommend is to implement constants by using const class constants. If you are running a compiler cache, these will be cached in the class definition, so they will not need to be reinstantiated on every request.

The preg_replace() calls demand more attention. By using a call tree (so you can be certain to find the instances of preg_replace() that are actually being called), you can narrow down the majority of the occurrences to this function:

    function serendipity_emoticate($str) {
        global $serendipity;
        foreach ($serendipity["smiles"] as $key => $value) {
            $str = preg_replace("/([\t\ ]?)".preg_quote($key,"/").
                                "([\t\ \!\.\)]?)/m", "$1<img src=\"$value\" />$2", $str);
        }
        return $str;
    }

where $serendipity['smiles'] is defined as

    $serendipity["smiles"] =
        array(":'(" => $serendipity["serendipityHTTPPath"]."pixel/cry_smile.gif",
              ":-)" => $serendipity["serendipityHTTPPath"]."pixel/regular_smile.gif",
              ":-O" => $serendipity["serendipityHTTPPath"]."pixel/embaressed_smile.gif",
              ":O"  => $serendipity["serendipityHTTPPath"]."pixel/embaressed_smile.gif",
              ":-(" => $serendipity["serendipityHTTPPath"]."pixel/sad_smile.gif",
              ":("  => $serendipity["serendipityHTTPPath"]."pixel/sad_smile.gif",
              ":)"  => $serendipity["serendipityHTTPPath"]."pixel/regular_smile.gif",
              "8-)" => $serendipity["serendipityHTTPPath"]."pixel/shades_smile.gif",
              ":-D" => $serendipity["serendipityHTTPPath"]."pixel/teeth_smile.gif",
              ":D"  => $serendipity["serendipityHTTPPath"]."pixel/teeth_smile.gif",
              "8)"  => $serendipity["serendipityHTTPPath"]."pixel/shades_smile.gif",
              ":-P" => $serendipity["serendipityHTTPPath"]."pixel/tounge_smile.gif",
              ";-)" => $serendipity["serendipityHTTPPath"]."pixel/wink_smile.gif",
              ";)"  => $serendipity["serendipityHTTPPath"]."pixel/wink_smile.gif",
              ":P"  => $serendipity["serendipityHTTPPath"]."pixel/tounge_smile.gif",
        );

and here is the function that actually applies the markup, substituting images for the emoticons and allowing other shortcut markups:

    function serendipity_markup_text($str, $entry_id = 0) {
        global $serendipity;

        $ret = $str;

        $ret = str_replace('\_', chr(1), $ret);
        $ret = preg_replace('/#([[:alnum:]]+?)#/','&\1;',$ret);
        $ret = preg_replace('/\b_([\S ]+?)_\b/','<u>\1</u>',$ret);
        $ret = str_replace(chr(1), '\_', $ret);

        //bold
        $ret = str_replace('\*',chr(1),$ret);
        $ret = str_replace('**',chr(2),$ret);
        $ret = preg_replace('/(\S)\*(\S)/','\1' . chr(1) . '\2',$ret);
        $ret = preg_replace('/\B\*([^*]+)\*\B/','<strong>\1</strong>',$ret);
        $ret = str_replace(chr(2),'**',$ret);
        $ret = str_replace(chr(1),'\*',$ret);

        // monospace font
        $ret = str_replace('\%',chr(1),$ret);
        $ret = preg_replace_callback('/%([\S ]+?)%/', 'serendipity_format_tt', $ret);
        $ret = str_replace(chr(1),'%',$ret);

        $ret = preg_replace('/\|([0-9a-fA-F]+?)\|([\S ]+?)\|/',
                            '<font color="\1">\2</font>',$ret);
        $ret = preg_replace('/\^([[:alnum:]]+?)\^/','<sup>\1</sup>',$ret);
        $ret = preg_replace('/\@([[:alnum:]]+?)\@/','<sub>\1</sub>',$ret);
        $ret = preg_replace('/([\\\])([*#_|^@%])/','\2', $ret);

        if ($serendipity['track_exits']) {
            $serendipity['encodeExitsCallback_entry_id'] = $entry_id;

            $ret = preg_replace_callback(
                "#<a href=(\"|')http://([^\"']+)(\"|')#im",
                'serendipity_encodeExitsCallback',
                $ret
            );
        }

        return $ret;
    }
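As an aside on the define() calls noted in the profile, the class-constant recommendation could look roughly like the following. This is only a minimal sketch: the class name BlogConfig, its constants, and the helper entries_for_page() are hypothetical and do not come from Serendipity.

```php
<?php
// Hypothetical configuration class; the names and values are illustrative only.
class BlogConfig
{
    const VERSION          = '0.0-example';
    const ENTRIES_PER_PAGE = 15;
}

// The define()-based equivalent would be a function call executed on every request:
// define('BLOG_ENTRIES_PER_PAGE', 15);

// Hypothetical helper showing how a class constant is read at the call site.
function entries_for_page(array $entries, $page)
{
    $offset = ($page - 1) * BlogConfig::ENTRIES_PER_PAGE;
    return array_slice($entries, $offset, BlogConfig::ENTRIES_PER_PAGE);
}
```

Because the constants are part of the class definition, they are declared when the class is compiled rather than through a run-time function call.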

The first function, serendipity_emoticate(), goes over a string and replaces each text emoticon, such as the smiley face :), with a link to an actual picture. This is designed to allow users to enter entries with emoticons in them and have the Web log software automatically beautify them. This is done on entry display, which allows users to re-theme their Web logs (including changing emoticons) without having to manually edit all their entries. Because there are 15 default emoticons, preg_replace() is run 15 times for every Web log entry displayed.

The second function, serendipity_markup_text(), implements certain common text typesetting conventions. This phrase:

    *hello*

is replaced with this:

    <strong>hello</strong>

Other similar replacements are made as well. Again, this is performed at display time so that you can add new text markups later without having to manually alter existing entries. This function runs nine preg_replace() and eight str_replace() calls on every entry.

Although these features are certainly neat, they can become expensive as traffic increases. Even with a single small entry, these calls constitute almost 15% of the script's runtime. On my personal Web log, the speed increases I have garnered so far are already more than the log will probably ever need. But if you were adapting this to be a service to users on a high-traffic Web site, removing this overhead might be critical.

You have two choices for reducing the impact of these calls. The first is to simply remove them altogether. Emoticon support can be implemented with a JavaScript entry editor that knows ahead of time what the emoticons are and lets the user select from a menu. The text markup can also be removed, requiring users to write their text markup in HTML.

A second choice is to retain both of the functions but apply them to entries before they are saved so that the overhead is experienced only when the entry is created.

Both of these methods remove the ability to change markups after the fact without modifying existing entries, which means you should only consider removing them if you need to.
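The second choice, applying the markup when an entry is saved rather than when it is displayed, might be sketched as follows. This is an illustration under stated assumptions, not Serendipity's actual storage code: render_entry_body() stands in for the serendipity_emoticate() and serendipity_markup_text() pipeline and applies only the bold rule, and the EntryStore class is a hypothetical in-memory substitute for the database.

```php
<?php
// Stand-in for the display-time pipeline. In Serendipity this role is played
// by serendipity_emoticate() and serendipity_markup_text(); only the bold
// rule is reproduced here to keep the sketch short.
function render_entry_body($raw)
{
    return preg_replace('/\B\*([^*]+)\*\B/', '<strong>\1</strong>', $raw);
}

// Hypothetical in-memory store; a real application would write to its database.
class EntryStore
{
    private $rows = array();

    // Pay the markup cost once, when the entry is created or edited.
    public function save($id, $rawBody)
    {
        $this->rows[$id] = array(
            'raw'      => $rawBody,
            'rendered' => render_entry_body($rawBody),
        );
    }

    // Display path: no regular expressions are run here.
    public function display($id)
    {
        return $this->rows[$id]['rendered'];
    }

    // Changing the markup rules later means re-rendering every stored entry.
    public function rerenderAll()
    {
        foreach ($this->rows as $id => $row) {
            $this->rows[$id]['rendered'] = render_entry_body($row['raw']);
        }
    }
}

$store = new EntryStore();
$store->save(1, 'I said *hello* to everyone.');
echo $store->display(1), "\n"; // I said <strong>hello</strong> to everyone.
```

Keeping the raw text alongside the rendered copy is an assumption of this sketch. It does not restore display-time flexibility, but it does let a batch job such as rerenderAll() update existing entries if the markup rules or emoticon images change.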